MLOps

Rapyder’s MLOps as a Service will provide data teams an easy way to build, train, deploy, and monitor machine learning model pipelines across different platforms.

What is MLOps?

MLOps refers to the combination of machine learning and operations. It is an approach to managing machine learning projects that bridges the gap between data scientists and operations teams and helps ensure that models are reliable and easily deployed.

MLOps is a core function of Machine Learning engineering, focused on streamlining the process of taking machine learning models to production and maintaining and monitoring them.

Why Should You Use MLOps?

As you move from running individual AI/ML projects to transforming your business at scale by running multiple AI/ML projects, the discipline of ML Operations (MLOps) can help. MLOps addresses the unique aspects of AI/ML projects in project management, CI/CD, and quality assurance, helping you improve delivery time, reduce defects, and make data science more productive. MLOps is a methodology built on applying DevOps practices to machine learning workloads.

Like DevOps, MLOps relies on a collaborative and streamlined approach to the machine learning development lifecycle, where the intersection of people, process, and technology optimizes the end-to-end activities required to develop, build, and operate machine learning workloads.

MLOps combines data science and data engineering with existing DevOps practices to streamline model delivery across the machine learning development lifecycle. MLOps is the discipline of integrating ML workloads into release management, CI/CD, and operations. MLOps requires integrating software development, operations, data engineering, and data science.

Benefits of MLOps

Adopting MLOps practices gives you faster time-to-market on ML projects, delivering the following benefits.

- Productivity: Providing self-service environments with access to curated data sets lets data engineers and scientists move faster and waste less time with missing or invalid data.

- Repeatability: Automating all the steps in the Machine Learning Development Life Cycle helps you ensure a repeatable process, including how the model is trained, evaluated, versioned, and deployed.

- Reliability: Incorporating CI/CD practices lets you deploy quickly, with higher quality and consistency.

- Auditability: Versioning all inputs and outputs, from data science experiments to source data to the trained model, means that we can demonstrate precisely how the model was built and where it was deployed.

- Data and model quality: MLOps lets us enforce policies that guard against model bias and track data statistical properties and model quality changes over time.


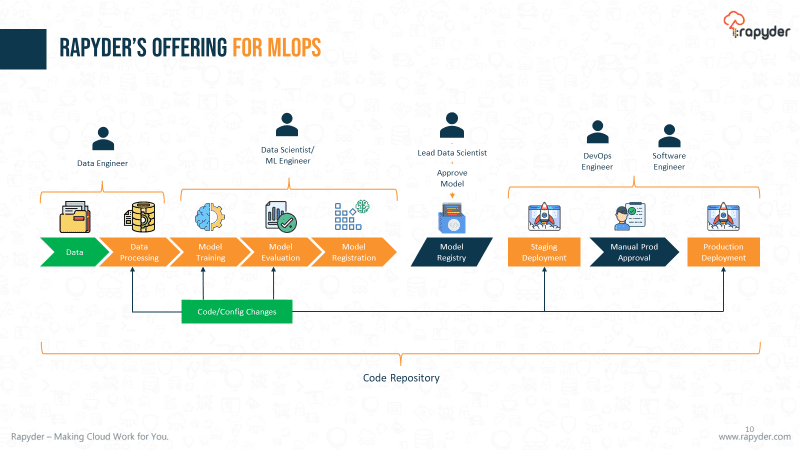

Rapyder’s MLOps Offering – MLOps Workload Manager

The MLOps Workload Manager solution is built on Amazon SageMaker and AWS DevOps services, which help you streamline and enforce architecture best practices for machine learning models. This solution is an extendable framework that provides a standard interface for creating and managing ML pipelines.

The solution’s template allows customers to:

- Pre-process data, then train and evaluate models

- Upload their trained models (bring your own model)

- Configure, deploy, and monitor models

- Configure and orchestrate the pipeline

- Monitor the pipeline’s operations

- Trigger the pipeline through new data uploads and code changes

This solution increases your team’s agility and efficiency by allowing them to repeat successful processes at scale.

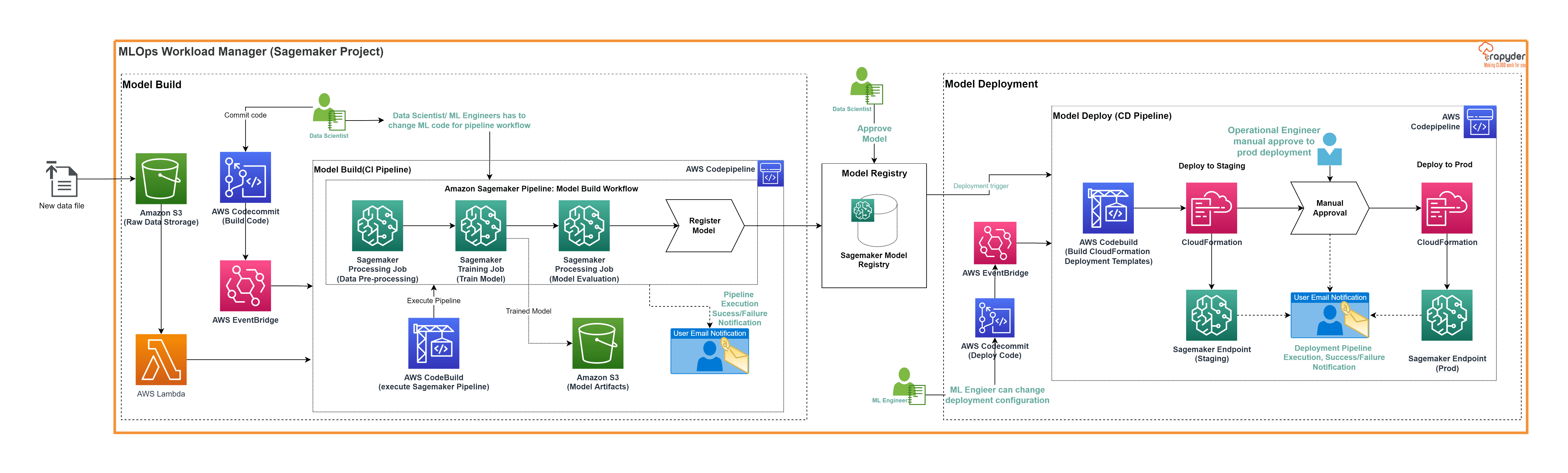

Flow Diagram:

MLOps Workload Overview:

There are three ways to trigger this workflow:

1) Data Trigger: Whenever new data is uploaded, it automatically triggers the MLOps workflow, and the model is built and deployed based on the new data.

2) Code Changes Trigger: Whenever a data scientist changes the code for pre-processing, model training, or evaluation, it triggers the MLOps workflow, and the model is built and deployed based on the new changes.

3) Deployment Changes: Whenever the ML engineer changes the deployment configuration, it triggers the MLOps deployment workflow, and the model is deployed again based on the new deployment configuration.
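As a rough illustration of the data trigger, the sketch below shows how a Lambda function might react to an S3 upload event and start a pipeline run. It is a minimal sketch under stated assumptions, not the solution's actual code: the pipeline name `mlops-model-build` and the function names are hypothetical, and the client is injectable so the logic can be exercised without AWS access.

```python
import urllib.parse


def extract_s3_uris(event):
    """Collect s3:// URIs for every object referenced in an S3 event."""
    uris = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket and key:
            # Object keys arrive URL-encoded in S3 event notifications.
            uris.append(f"s3://{bucket}/{urllib.parse.unquote_plus(key)}")
    return uris


def handler(event, context, pipeline_client=None,
            pipeline_name="mlops-model-build"):  # hypothetical pipeline name
    """Start the model build pipeline when new data objects arrive."""
    uris = extract_s3_uris(event)
    if not uris:
        return {"started": False, "uris": []}
    if pipeline_client is None:
        import boto3  # imported lazily so the parsing logic has no AWS dependency
        pipeline_client = boto3.client("codepipeline")
    response = pipeline_client.start_pipeline_execution(name=pipeline_name)
    return {
        "started": True,
        "uris": uris,
        "executionId": response["pipelineExecutionId"],
    }
```

Code and deployment changes would typically be wired differently, for example through repository events rather than a Lambda function; that wiring is not shown here.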

Model Approval:

Once the model has been trained and evaluated, it is registered in the model registry. A data scientist then visits the registry, examines the model's metrics, and manually approves the model.
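The manual review can be supported by a small helper that compares a model's metrics with minimum thresholds before the reviewer records a decision. The following is a sketch only: the metric names and thresholds are hypothetical, and the approval call assumes a boto3 SageMaker client whose `update_model_package` method sets `ModelApprovalStatus`.

```python
def review_metrics(metrics, thresholds):
    """Return (ok, failures); failures lists metrics missing or below minimum."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            failures.append(name)
    return (not failures, failures)


def record_approval(sagemaker_client, model_package_arn, approved):
    """Record the reviewer's decision on a registered model package."""
    status = "Approved" if approved else "Rejected"
    sagemaker_client.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus=status,
    )
    return status
```

The decision itself stays with the data scientist; the helper only makes the check repeatable.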

MLOps Workload Manager Components

Model Building:

- Pre-processing: Replace with your data cleansing script.

- Training: Replace with your custom training script.

- Evaluation: Replace with your model evaluation script.

- Register Model: Store model versions and perform the model comparison.
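The four model building steps can be pictured as pluggable functions chained together, with the registry keeping every version for later comparison. This is a toy, in-memory sketch for illustration; the class and function names are hypothetical, and the real solution runs these steps as managed jobs with a managed model registry.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ModelVersion:
    version: int
    model: Any
    metrics: Dict[str, float]


@dataclass
class InMemoryRegistry:
    """Toy stand-in for a model registry: stores versions, compares them."""
    versions: List[ModelVersion] = field(default_factory=list)

    def register(self, model: Any, metrics: Dict[str, float]) -> ModelVersion:
        entry = ModelVersion(len(self.versions) + 1, model, metrics)
        self.versions.append(entry)
        return entry

    def best(self, metric: str) -> ModelVersion:
        # Assumes higher is better for the chosen metric.
        return max(self.versions, key=lambda v: v.metrics[metric])


def run_model_build(raw_data: Any,
                    preprocess: Callable[[Any], Any],
                    train: Callable[[Any], Any],
                    evaluate: Callable[[Any, Any], Dict[str, float]],
                    registry: InMemoryRegistry) -> ModelVersion:
    """Run pre-processing, training, evaluation, then register the result."""
    cleaned = preprocess(raw_data)
    model = train(cleaned)
    metrics = evaluate(model, cleaned)
    return registry.register(model, metrics)
```

Each callable corresponds to one of the scripts you replace with your own.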

Model Approval:

Once the model has been trained, evaluated, and registered in the model registry, data scientists can manually approve the model by examining relevant metrics.

Model Deployment:

- Staging Deployment: Perform user acceptance testing (UAT) at this stage.

- Production Approval: Manual approval on successful UAT.

- Production Deployment: This step deploys the ML model to the production environment. You can change the environment configuration, such as instance type (CPUs/GPUs) and instance count.
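One way to picture the per-environment configuration is a small settings object that renders the production variant entry a SageMaker endpoint configuration expects. This is a sketch under assumptions: the class name, the variant name `AllTraffic`, and the production instance count are illustrative; only the `ml.m5.large` instance type comes from the cost estimate on this page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentConfig:
    environment: str
    instance_type: str
    instance_count: int

    def production_variant(self, model_name: str) -> dict:
        """Build one ProductionVariants entry for an endpoint configuration."""
        if self.instance_count < 1:
            raise ValueError("instance_count must be at least 1")
        return {
            "VariantName": "AllTraffic",  # assumed variant name
            "ModelName": model_name,
            "InstanceType": self.instance_type,
            "InitialInstanceCount": self.instance_count,
            "InitialVariantWeight": 1.0,
        }


# Illustrative settings; tune instance type and count after load testing.
STAGING = DeploymentConfig("staging", "ml.m5.large", 1)
PRODUCTION = DeploymentConfig("production", "ml.m5.large", 2)
```

Keeping staging and production as separate objects means a change to either one is an explicit, reviewable edit.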

MLOps Workload Manager Architecture and AWS services

AWS Services:

- Amazon SageMaker

- AWS CodeCommit

- AWS CodeBuild

- AWS CodePipeline

- AWS CloudFormation

- AWS Lambda

- Amazon EventBridge

- Amazon S3

Cost

You will incur charges on your AWS account while running this solution. Once you delete the CloudFormation stack, all services are removed from your environment, and billing for the solution stops. As of 3rd November 2022, the cost of running this solution with the default settings in the Mumbai Region is approximately $211 per month.

Prices are subject to change. For details, refer to the AWS pricing webpage for each service.

Example cost table for Mumbai Region

- This estimate uses an ml.m5.large instance. However, the right instance type and actual performance depend highly on factors such as model complexity, algorithm, input size, and concurrency.

- For cost-efficient performance, run load tests to select the proper instance size, and use batch transform instead of real-time inference when possible.

Cost Summary (Monthly)

| Description | Service | Monthly cost ($) |
| --- | --- | --- |
| Model Artifacts Bucket | S3 Standard | 2.55 |
| Model Build – CodePipeline | AWS CodePipeline | 1 |
| Model Build – CodeBuild | AWS CodeBuild | 1.5 |
| Parameter Store entry for the data S3 URI | Parameter Store | 0 |
| Data pre-processing and model evaluation scripts | SageMaker Processing | 9.68 |
| Model training | SageMaker Training | 4.84 |
| Real-time model deployment | SageMaker Real-Time Inference | 180.05 |
| Batch transform of model evaluation data | SageMaker Batch Transform | 4.84 |
| Data Storage Bucket | S3 Standard | 2.55 |
| Model Deploy – CodePipeline | AWS CodePipeline | 1 |
| Model Deploy – CodeBuild | AWS CodeBuild | 1.5 |
| New data trigger Lambda function | AWS Lambda | 0 |
| Model Build – Git Repository | AWS CodeCommit | 1 |
| Model Deploy – Git Repository | AWS CodeCommit | 1 |
| Email Notification Service | Amazon Simple Notification Service (SNS) | 0.38 |
| Total monthly estimate | | 211.89 |
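The total can be checked by summing the line items, which also shows how much of the estimate comes from the real-time endpoint. The snippet below is a small sketch that copies the figures from the table; the variable and function names are only illustrative.

```python
from decimal import Decimal

# Monthly line items (USD) copied from the cost table above.
MONTHLY_COSTS = [
    ("Model Artifacts Bucket", "2.55"),
    ("Model Build CodePipeline", "1"),
    ("Model Build CodeBuild", "1.5"),
    ("Parameter Store", "0"),
    ("SageMaker Processing", "9.68"),
    ("SageMaker Training", "4.84"),
    ("SageMaker Real-Time Inference", "180.05"),
    ("SageMaker Batch Transform", "4.84"),
    ("Data Storage Bucket", "2.55"),
    ("Model Deploy CodePipeline", "1"),
    ("Model Deploy CodeBuild", "1.5"),
    ("AWS Lambda", "0"),
    ("Model Build Git Repository", "1"),
    ("Model Deploy Git Repository", "1"),
    ("Amazon SNS", "0.38"),
]


def total_cost(items):
    """Sum the line items exactly using decimal arithmetic."""
    return sum((Decimal(amount) for _, amount in items), Decimal("0"))


def share_of_total(items, name):
    """Percentage of the total contributed by one named line item."""
    amount = next(Decimal(a) for n, a in items if n == name)
    return amount / total_cost(items) * 100


TOTAL = total_cost(MONTHLY_COSTS)
REALTIME_SHARE = share_of_total(MONTHLY_COSTS, "SageMaker Real-Time Inference")
```

The line items add up to the stated 211.89, and real-time inference accounts for roughly 85% of it, which is why replacing an always-on endpoint with batch transform, where the use case allows, has the largest effect on cost.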