Overview

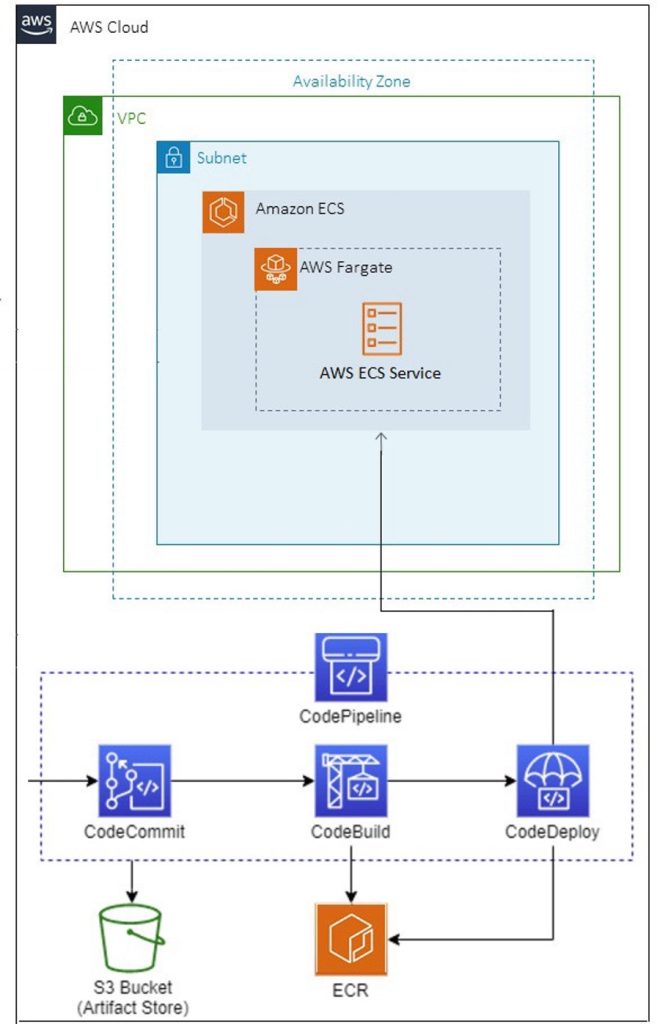

Many organizations are moving toward containerized infrastructure and workflows, and they can build CI/CD pipelines for delivering containerized applications with the native tools and services that AWS provides. This post explains how to create continuous integration and continuous delivery (CI/CD) pipelines, along with the supporting infrastructure, to build and deploy microservices on Amazon Elastic Container Service (Amazon ECS). The pipelines pull changes from an AWS CodeCommit source repository, build them automatically, and then deploy them to the target environment.

In this blog post, we’ll build an end-to-end CI/CD solution for container deployments using the AWS native technologies listed below.

Pipeline components

The AWS pipeline includes the following elements:

· AWS CodeCommit

· AWS CodeBuild

· AWS CodeDeploy

· AWS CodePipeline

· Amazon S3 artifact bucket

Pre-requisites

- Familiarity with Amazon Elastic Container Registry (Amazon ECR), Amazon ECS, and AWS Fargate

- A basic understanding of CI/CD, DevOps, and Docker

- An Amazon ECR repository (e.g., ecr-demo) with an Apache Docker image pushed to it

- One ECS Fargate cluster (e.g., web-cluster) that is up and running, with one ECS task definition (e.g., web-task) and one running ECS service (e.g., web-service) that uses the previously pushed Docker image
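
For the ECR prerequisite, an image has to be tagged with the repository's full URI before it can be pushed. The following is a minimal Python sketch of how that reference is composed. The account ID `123456789012`, the region `us-east-1`, and the helper name `ecr_image_uri` are placeholders chosen for illustration and do not come from this post.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Compose the full image reference for a private Amazon ECR repository."""
    # Private ECR registries use the host <account>.dkr.ecr.<region>.amazonaws.com
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


if __name__ == "__main__":
    # Placeholder account ID and region; "ecr-demo" is the example name from the prerequisites.
    print(ecr_image_uri("123456789012", "us-east-1", "ecr-demo"))
```

The resulting string is what you would pass to `docker tag` and `docker push` for the Apache image.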

Steps walkthrough

Create a CodeCommit repository

AWS CodeCommit is a private version control service that you can use to store and manage your Git repositories in the AWS Cloud.

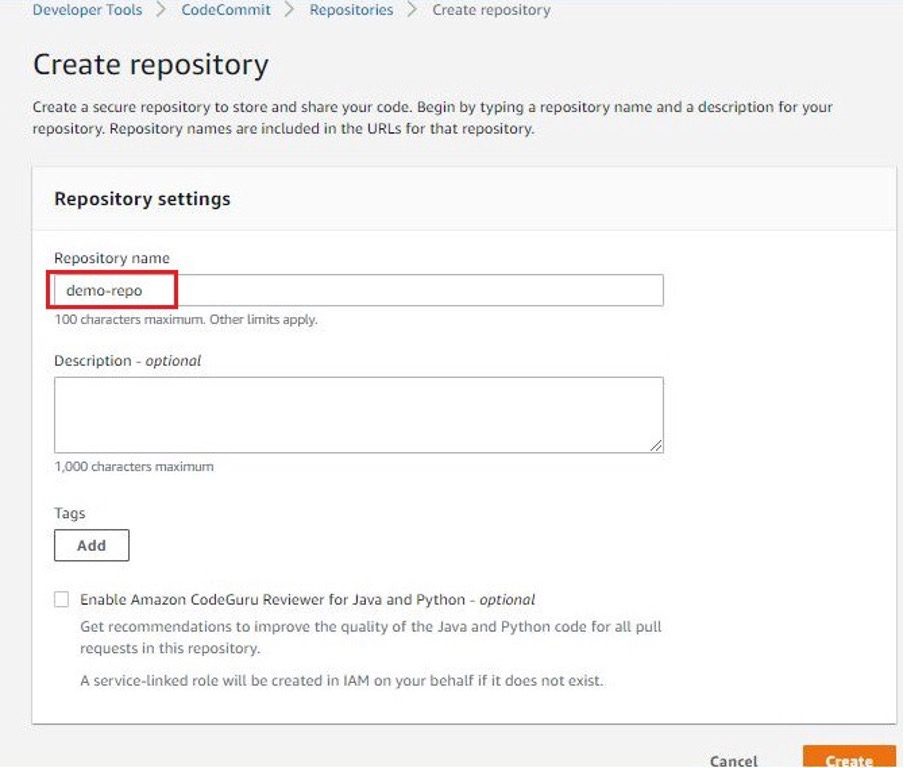

1. Open the CodeCommit dashboard in the AWS Console. On the Repositories page, click Create repository.

2. On the Create repository screen, give your repository a name (such as demo-repo), then click Create.

3. After the repository has been created, add the required permissions and create Git credentials so that you can access CodeCommit.

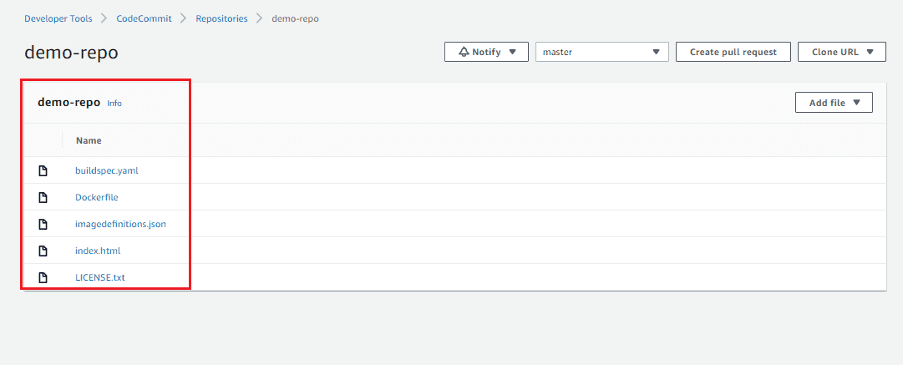

4. Next, push the Docker source code to the repository. After a successful push, the files appear in your CodeCommit repository. Sample source code link: https://github.com/RasanKhalsa/codepipeline_ecr.git
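
To push code with the Git credentials from step 3, you need the repository's HTTPS clone URL, which follows a fixed pattern. The sketch below only builds that URL and the matching `git clone` command line; it does not run Git. The region `us-east-1` and the function names are assumptions for illustration.

```python
def codecommit_clone_url(region: str, repository: str) -> str:
    """Build the HTTPS clone URL that CodeCommit assigns to a repository."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repository}"


def clone_command(region: str, repository: str) -> list:
    """Return the git clone command as an argument list; nothing is executed here."""
    return ["git", "clone", codecommit_clone_url(region, repository)]


if __name__ == "__main__":
    # "demo-repo" is the example name from step 2; the region is a placeholder.
    print(" ".join(clone_command("us-east-1", "demo-repo")))
```

After cloning, you would copy the sample files into the working directory, commit them, and push to the master branch.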

Create a CodeBuild project

AWS CodeBuild is a fully managed, cloud-based build service. It compiles the source code, runs unit tests, and produces artifacts.

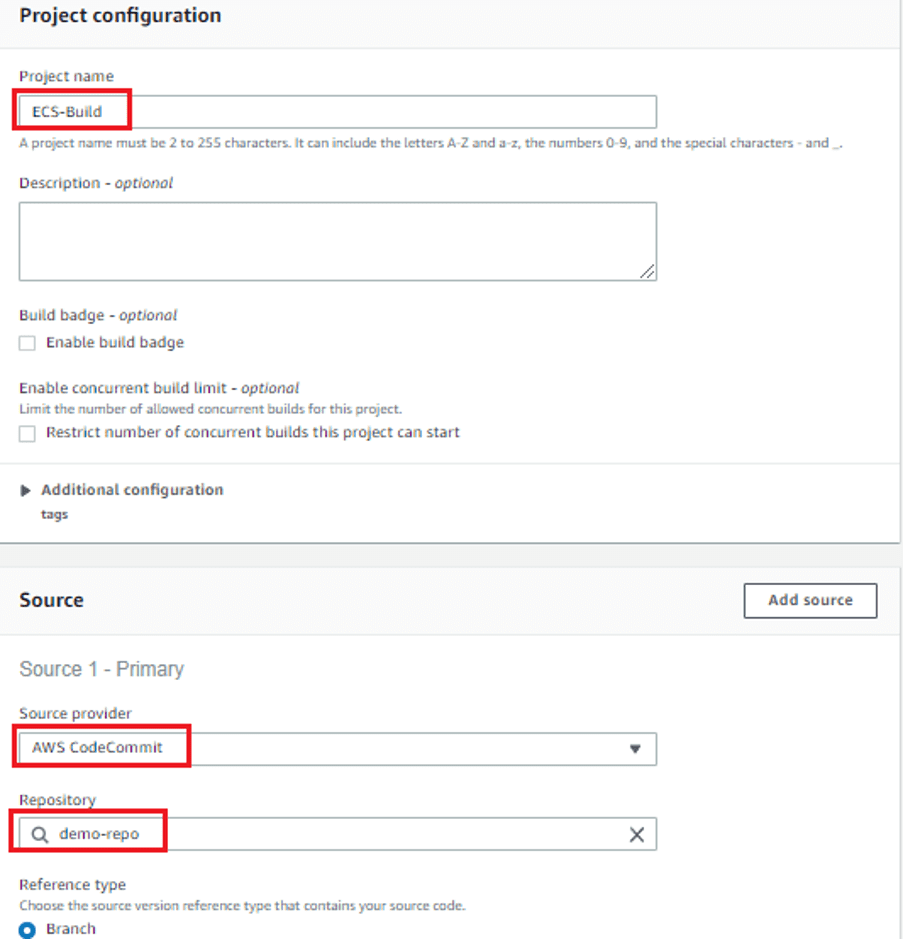

1. On the CodeBuild dashboard, click Create build project.

2. In the project configuration, specify the project name (ECS-Build, for example). Select AWS CodeCommit as the source provider, and enter the repository name and the branch.

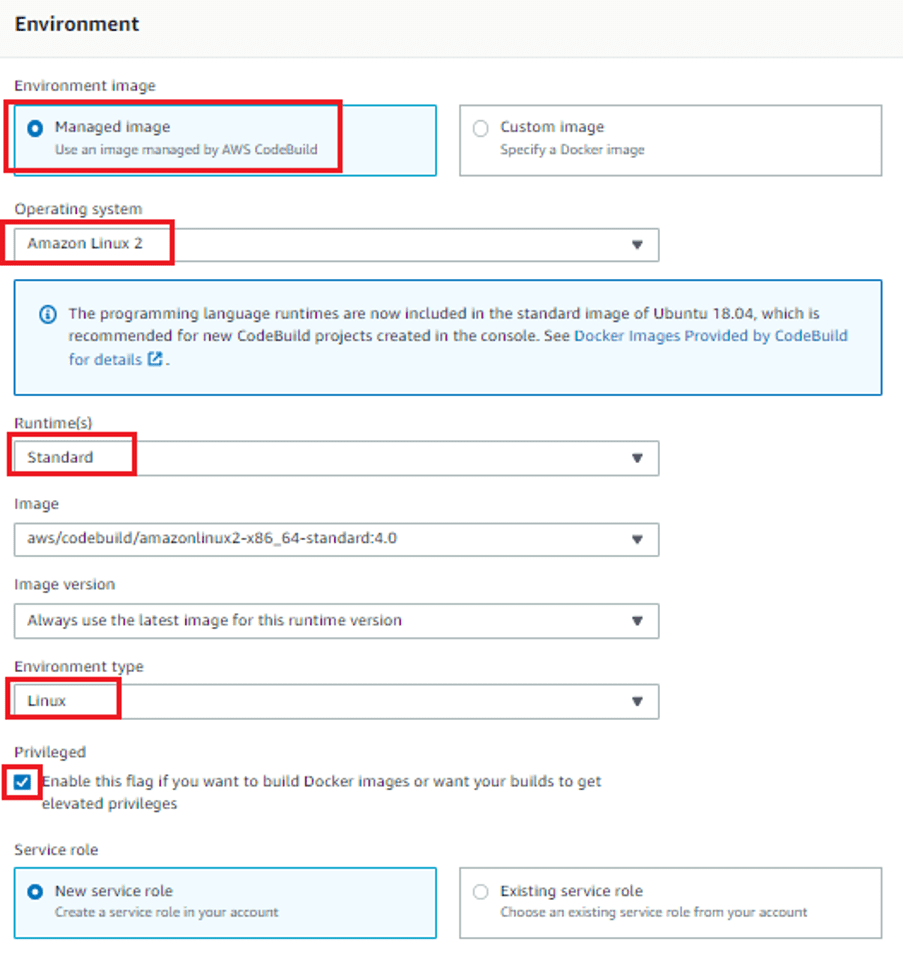

3. In the environment settings, choose Managed image with Amazon Linux 2 as the operating system, the Standard runtime, and the most recent image version. Make sure the Privileged checkbox, which grants the elevated access needed for Docker builds, is selected. Then choose New service role. To build and push the Docker image, ensure that the CodeBuild service role has Amazon ECR permissions.

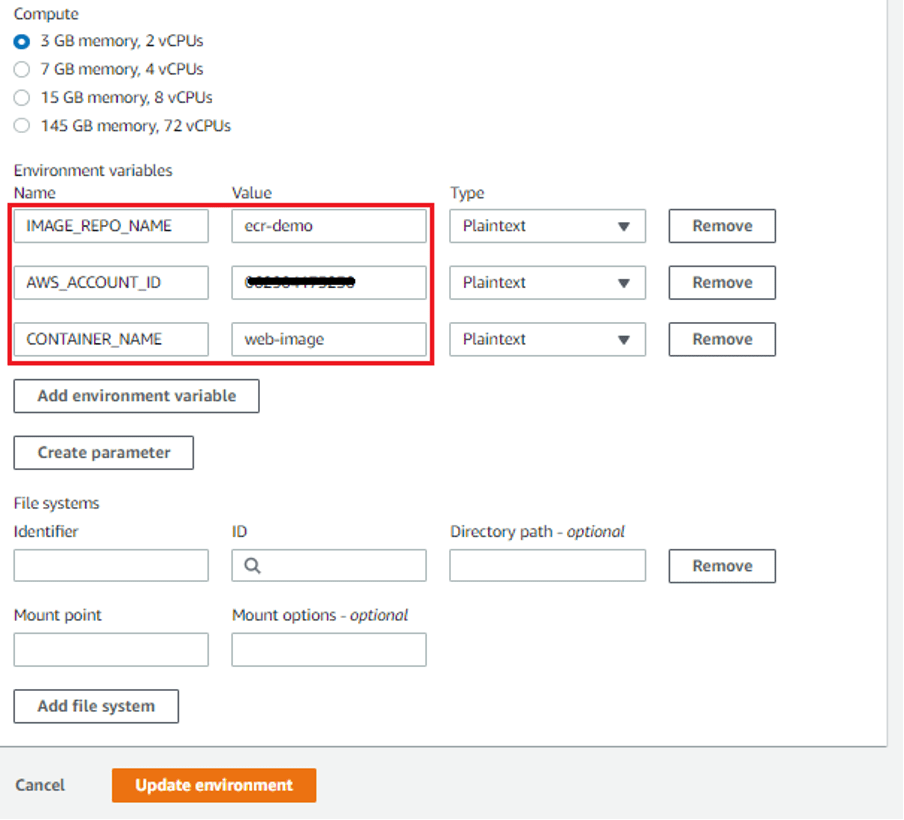

4. Under Environment variables, declare the variables that buildspec.yml uses, such as AWS_ACCOUNT_ID, IMAGE_REPO_NAME, and CONTAINER_NAME.

5. The next parameter is the buildspec.yml file, which provides the commands used to compile, test, and package the code. For our needs, the buildspec.yml file contains instructions for building a Docker image, tagging it, and pushing it to ECR. It will look like this:

    version: 0.2

    phases:
      pre_build:
        commands:
          - echo Logging in to Amazon ECR...
          - aws --version
          # Registry host and full repository URI for the image
          - REPO=$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com
          - REPOSITORY_URI=$REPO/$IMAGE_REPO_NAME
          - COMMIT_HASH=$(echo $CODEBUILD_RESOLVED_SOURCE_VERSION | cut -c 1-7)
          # Tag each image with the unique part of the CodeBuild build ID
          - IMAGE_TAG=build-$(echo $CODEBUILD_BUILD_ID | awk -F":" '{print $2}')
          - echo image_tag $IMAGE_TAG
          # "aws ecr get-login" was removed in AWS CLI v2; get-login-password replaces it
          - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $REPO
      build:
        commands:
          - echo Build started on `date`
          - echo Building the Docker image...
          - docker build -t $REPOSITORY_URI:latest .
          - docker tag $REPOSITORY_URI:latest $REPOSITORY_URI:$IMAGE_TAG
      post_build:
        commands:
          - echo Build completed on `date`
          - echo Pushing the Docker images...
          - docker push $REPOSITORY_URI:$IMAGE_TAG
          # The ECS deploy action reads the container name and image URI from this file
          - echo Writing image definitions file...
          - printf '[{"name":"%s","imageUri":"%s"}]' $CONTAINER_NAME $REPOSITORY_URI:$IMAGE_TAG > imagedefinitions.json
          - cat imagedefinitions.json

    artifacts:
      files: imagedefinitions.json

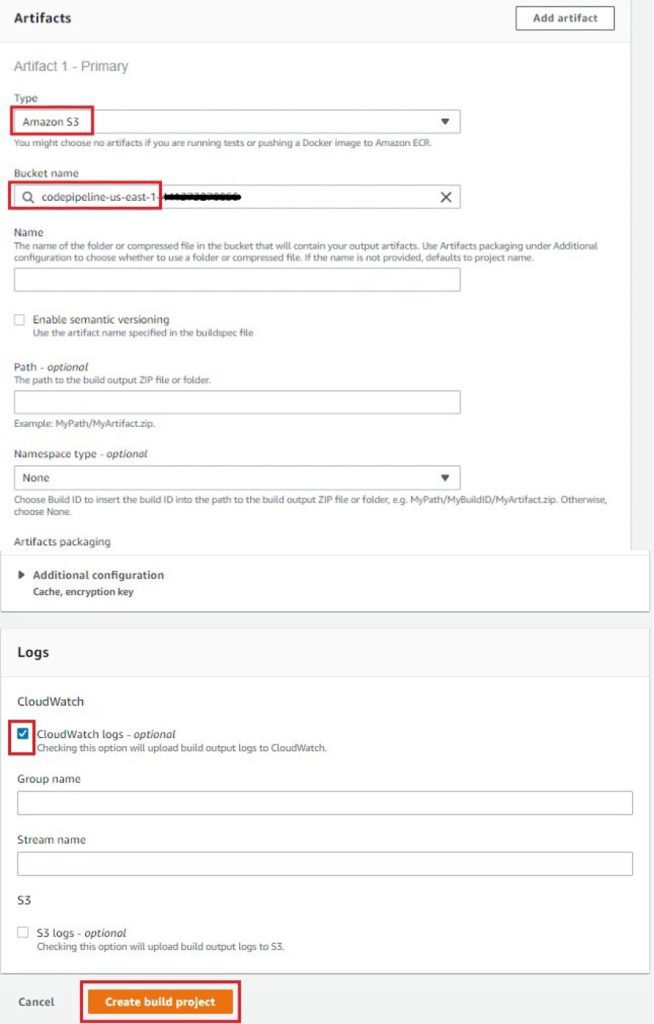

6. In the Artifacts section, select Amazon S3 as the type and enter the name of the bucket where the artifacts described in the buildspec will be saved. Leave the remaining parameters at their defaults and click Create build project.
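
To make the buildspec in step 5 easier to follow, here is a small Python sketch of two of its shell steps: deriving the image tag from the CodeBuild build ID (the `awk -F":"` command) and writing the content of imagedefinitions.json (the `printf` command). The build ID value, the container name `web-container`, and the account ID are hypothetical; the function names are mine and are not part of CodeBuild.

```python
import json


def image_tag(build_id: str) -> str:
    """Mimic IMAGE_TAG=build-$(echo $CODEBUILD_BUILD_ID | awk -F":" '{print $2}')."""
    fields = build_id.split(":")
    # awk prints an empty string when the second field does not exist.
    second = fields[1] if len(fields) > 1 else ""
    return f"build-{second}"


def image_definitions(container_name: str, repository_uri: str, tag: str) -> str:
    """Return the JSON text that the buildspec writes to imagedefinitions.json."""
    entry = {"name": container_name, "imageUri": f"{repository_uri}:{tag}"}
    # Compact separators match the output of the printf template in the buildspec.
    return json.dumps([entry], separators=(",", ":"))


if __name__ == "__main__":
    # CodeBuild build IDs have the form <project-name>:<unique-id>; this one is made up.
    tag = image_tag("ECS-Build:1a2b3c4d")
    uri = "123456789012.dkr.ecr.us-east-1.amazonaws.com/ecr-demo"
    print(image_definitions("web-container", uri, tag))
```

Because the tag comes from the build ID, every build pushes a uniquely tagged image, and the deploy stage picks up exactly that image through the generated file.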

Configure AWS CodePipeline for deployment

With CodePipeline in place, our Docker container is built and deployed automatically whenever the source repository is updated.

1. From the CodePipeline console in the AWS Console, select Create pipeline.

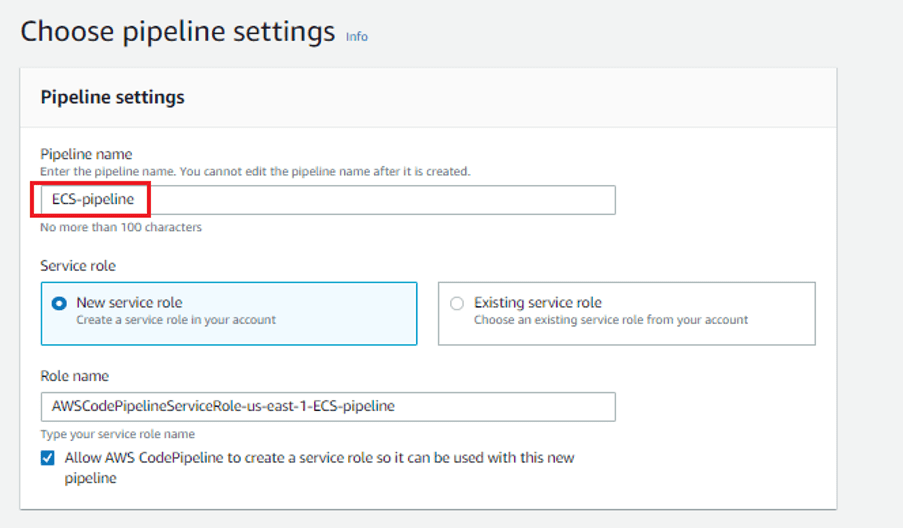

2. In the pipeline settings, enter the pipeline name (e.g., ECS-pipeline). Leave the advanced options at their default values and select Next.

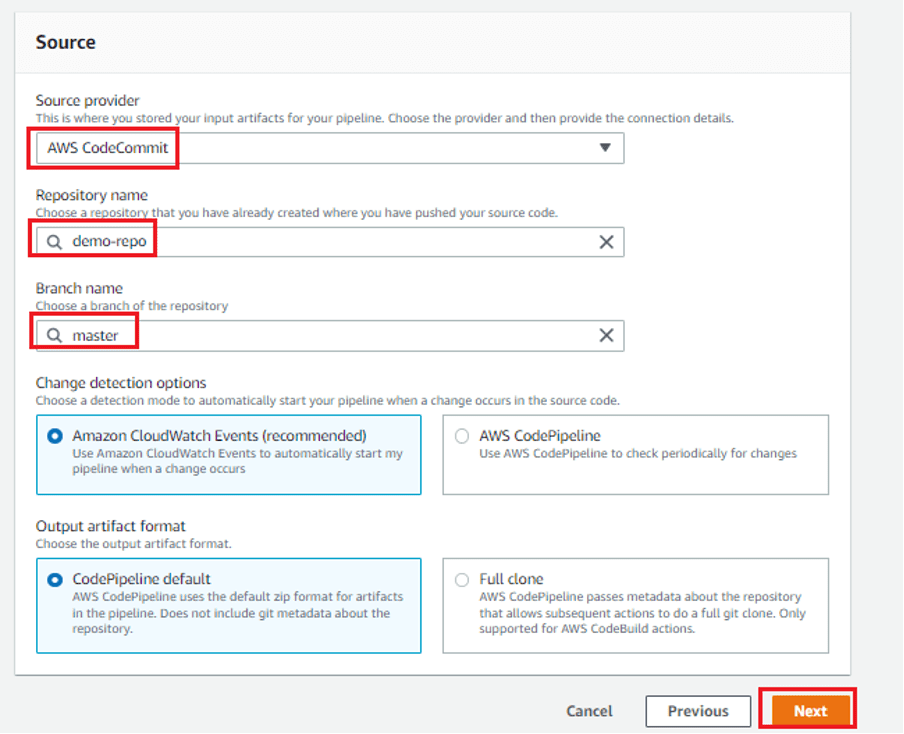

3. In the source stage, select AWS CodeCommit from the Source provider drop-down menu. Under Repository name, select the repository created earlier (for example, demo-repo), and under Branch name, select master. Leave the Change detection options at their defaults so that CodePipeline can use Amazon CloudWatch Events to detect changes in your source repository, then choose Next.

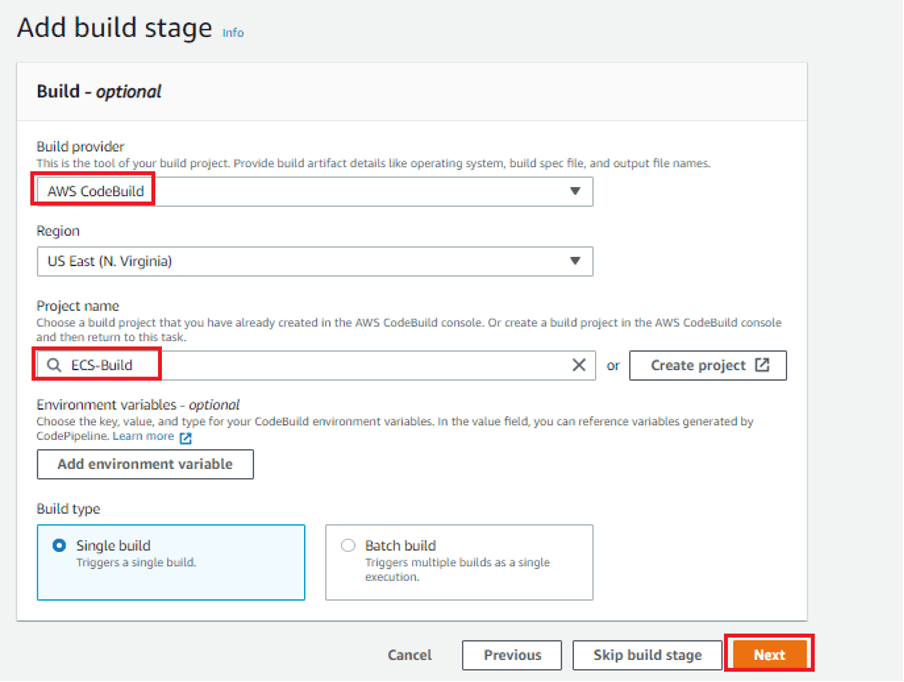

4. In the build stage, choose AWS CodeBuild and select the project created in the earlier phase, such as ECS-Build. Add any parameters the build stage needs, then select Next.

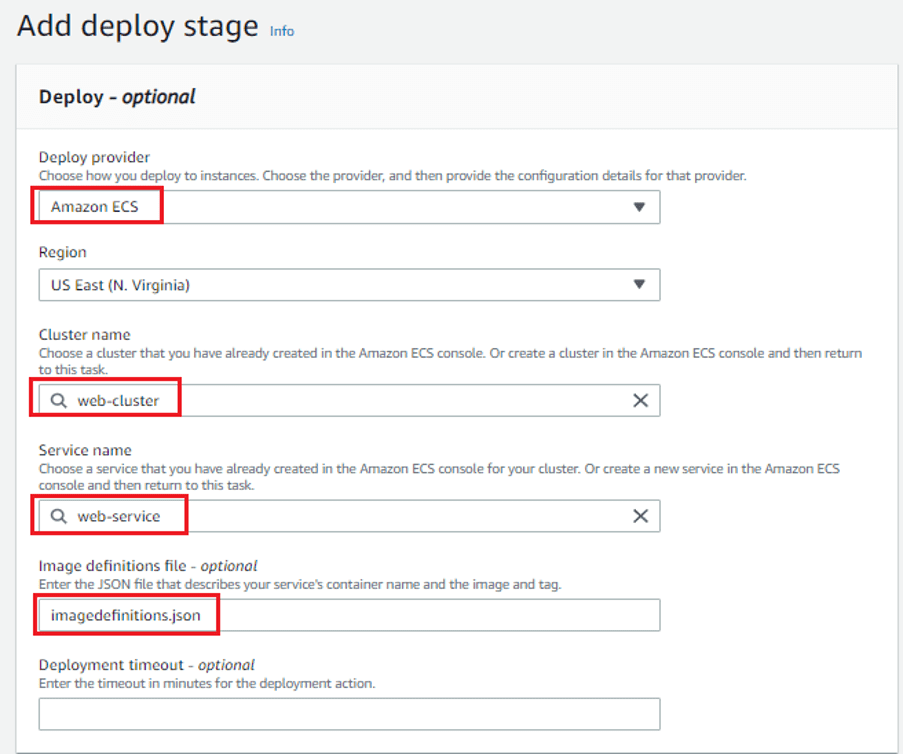

5. In the Add deploy stage step, select Amazon ECS under Deploy provider. Select the prerequisite cluster (such as web-cluster) and service (such as web-service), and enter the name of the image definitions file (imagedefinitions.json) that the build stage produces. An image definitions file is a JSON document that lists the name of your Amazon ECS container together with its image and tag. Then click Next.

6. Select Create pipeline after reviewing the data in the Review stage.

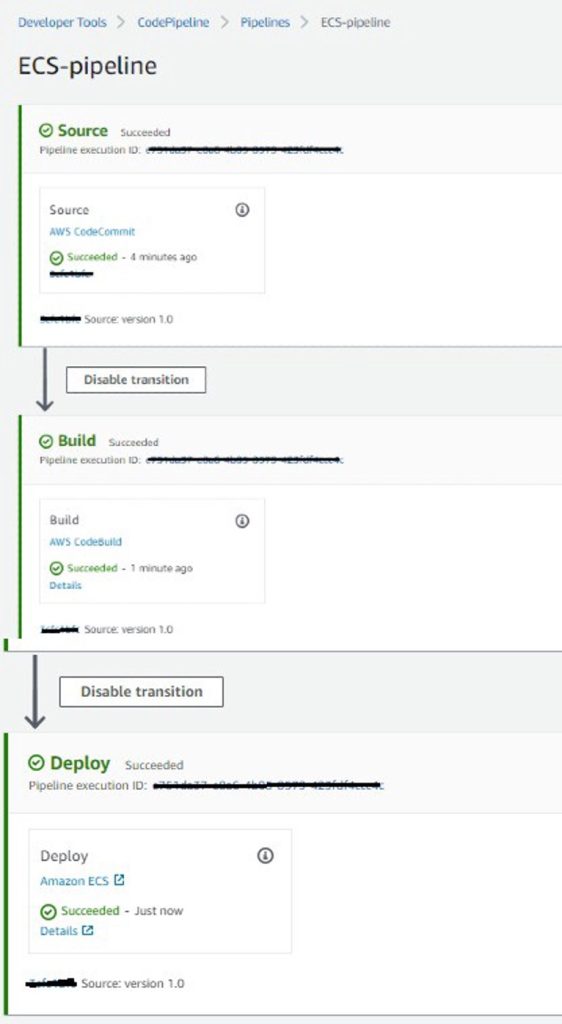

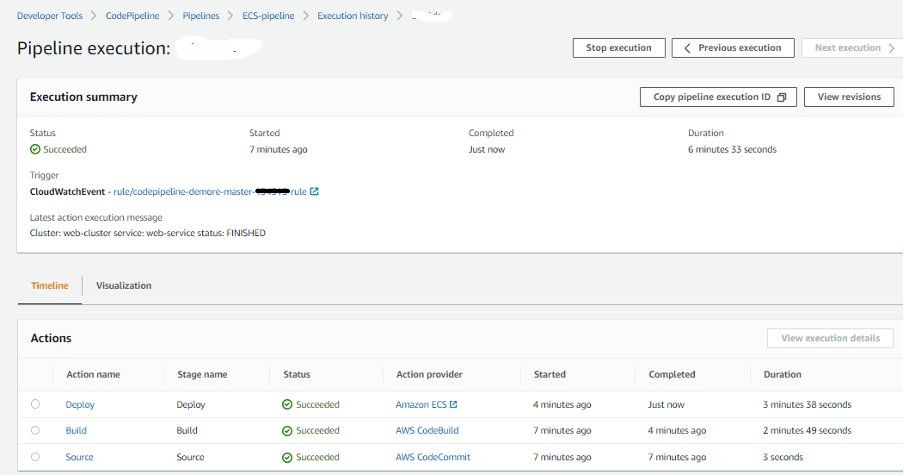

7. The pipeline starts as soon as it has been created. It pulls the code from the CodeCommit repository, CodeBuild builds a Docker image from the updated source, and the deploy stage rolls that image out to the ECS Fargate service. You can view the status, success, and failure messages in the AWS CodePipeline console.

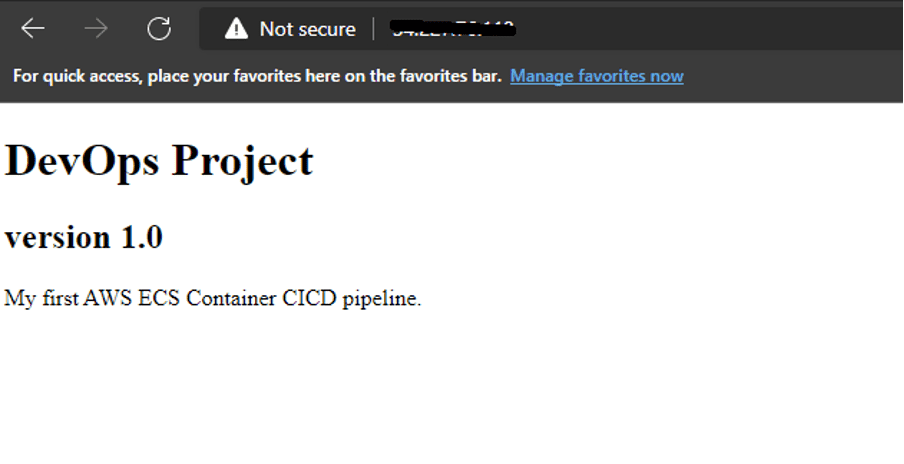

8. Verify the result: open your web browser and paste in the public IP address of the ECS container. The browser displays the web page served by the container.

9. After the pipeline has finished, its execution logs are available in the pipeline details, where you can follow each stage, its resources, and its progress.
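
As a recap of steps 3 to 5, the pipeline has three stages, each with one action. The Python sketch below lists them as plain data. The provider names and configuration keys follow the CodePipeline action reference as I understand it, and the function itself is hypothetical: it calls no AWS API and only illustrates how the values from the walkthrough fit together.

```python
def pipeline_stages(repo: str, branch: str, build_project: str,
                    cluster: str, service: str,
                    image_file: str = "imagedefinitions.json") -> list:
    """Describe the Source, Build, and Deploy stages configured in the walkthrough."""
    return [
        {"name": "Source", "provider": "CodeCommit",
         "configuration": {"RepositoryName": repo, "BranchName": branch}},
        {"name": "Build", "provider": "CodeBuild",
         "configuration": {"ProjectName": build_project}},
        {"name": "Deploy", "provider": "ECS",
         "configuration": {"ClusterName": cluster, "ServiceName": service,
                           "FileName": image_file}},
    ]


if __name__ == "__main__":
    # Example names taken from the walkthrough.
    stages = pipeline_stages("demo-repo", "master", "ECS-Build",
                             "web-cluster", "web-service")
    for stage in stages:
        print(stage["name"], "->", stage["provider"], stage["configuration"])
```

The `FileName` value must match the artifact name declared in the buildspec, since that file is how the deploy stage learns which image to roll out.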

We have created a CI/CD pipeline with the AWS developer tools to deploy microservices on Amazon ECS with Fargate. When a change is pushed to the source code in the CodeCommit repository, the pipeline starts automatically, builds an updated Docker image, and deploys it to the ECS Fargate cluster.